Cybersecurity and other data-related issues top the list of risks for heads of audit in 2019; here are key actions audit must take.

The number of cyberattacks continues to increase significantly as threat actors become more sophisticated and diversify their methods. It’s hardly surprising, then, that cybersecurity preparedness tops the list of internal audit priorities for 2019.

Other data and IT issues are also on the radar for internal audit, according to the Gartner Audit Plan Hot Spots. Cybersecurity topped the list of 2019’s 12 emerging risks, followed by data governance, third parties and data privacy.

“These risks, or hot spots, are the top-of-mind issues for business leaders who expect heads of internal audit to assess and mitigate them, as well as communicate their impact to organizations and stakeholders,” said Malcolm Murray, VP, Team Manager at Gartner.

What audit can do on cyberpreparedness

The Gartner 2019 Audit Key Risks and Priorities Survey shows that 77% of audit departments definitely plan to cover cybersecurity detection and prevention in audit activities during the next 12–18 months. Only 5% have no such activities planned. And yet, only 53% of audit departments are highly confident in their ability to provide assurance over cybersecurity detection and prevention risks.

Here are some steps audit can take to tackle cybersecurity preparedness:

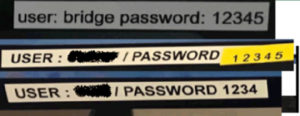

- Review encryption on all devices, including mobile phones and laptops. Assess password strength and the use of multifactor authentication.

- Review access management policies and controls, and set user access and privileges by defined business needs. Swiftly amend access when roles change.

- Review patch management policies, evaluating the average time from patch release to implementation and the frequency of updates. Make sure patches cover IoT devices.

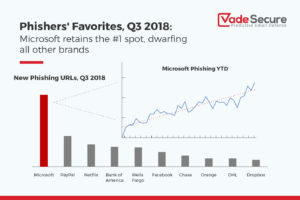

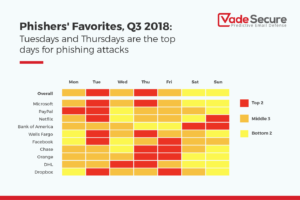

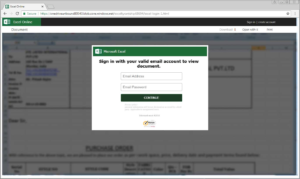

- Evaluate employee security training to ensure that the breadth, frequency and content are effective. Don’t forget to build awareness of common security threats such as phishing.

- Participate in cyber working groups and committees to develop cybersecurity strategy and policies. Help determine how the organization identifies, assesses and mitigates cyberrisk and strengthens current cybersecurity controls.
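The patch management step above asks for the average time from patch release to implementation. As a minimal sketch of that calculation, assuming audit can export a list of patches with release and deployment dates, the Python below computes the average and flags slow deployments. The record layout, sample dates and the 14-day threshold are hypothetical illustrations, not Gartner figures.

```python
from datetime import date
from statistics import mean

# Hypothetical patch records: (patch id, vendor release date, deployment date).
# The layout and the sample values are illustrative assumptions.
PATCHES = [
    ("patch-a", date(2019, 1, 8), date(2019, 1, 15)),
    ("patch-b", date(2019, 2, 12), date(2019, 3, 14)),
    ("patch-c", date(2019, 3, 12), date(2019, 3, 20)),
]


def days_to_deploy(released, deployed):
    """Number of days between vendor release and deployment."""
    return (deployed - released).days


def average_days_to_deploy(patches):
    """Average release-to-implementation time across all patches, in days."""
    return mean(days_to_deploy(rel, dep) for _, rel, dep in patches)


def overdue(patches, max_days):
    """Ids of patches that took longer than max_days to deploy."""
    return [pid for pid, rel, dep in patches if days_to_deploy(rel, dep) > max_days]


if __name__ == "__main__":
    print("Average days to deploy:", average_days_to_deploy(PATCHES))
    # 14 days is an assumed policy threshold, chosen only for illustration.
    print("Slower than 14 days:", overdue(PATCHES, 14))
```

An average alone can hide a few very slow patches, which is why the sketch also lists individual outliers against a threshold.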

Data governance

Big data increases the strategic importance of effective mechanisms to collect, use, store and manage organizational data, but many organizations still lack formal data governance frameworks and struggle to establish consistency across the organization. Few scale their programs effectively to meet the growing volume of data. Left unsolved, these governance challenges can lead to operational drag, delayed decision making and unnecessary duplication of efforts.

What audit can do:

- Review the data assets inventory, which must include, at a minimum, the highest-value data assets of the organization. Assess the extent of both structured and unstructured data assets.

- Review the classification of data and associated processes and policies. Analyze how data will be retained and destroyed, what encryption is required and whether relevant categories of use have been established.

- Participate in relevant working groups and committees to stay abreast of governance efforts and provide advisory input when frameworks are built.

- Review data analytics training and talent assessments, identify skill gaps and plan how to fill them. Evaluate the content and availability of existing training.

- Review the analytics tools inventory across the organization. Determine if IT has an approved vendor list for analytics tools and what efforts are being made to educate the business on the use of approved tools.

Third parties

Efforts to digitalize systems and processes add new, complex dimensions to third-party challenges that have been a perennial concern for organizations. Nearly 70% of chief audit executives reported third-party risk as one of their top concerns, but organizations still struggle to manage this risk.

What audit can do:

- Evaluate scenario analysis for strategic initiatives to analyze potential risks and outcomes associated with interdependent partners in the organization’s business ecosystem. Consider enterprise risk appetite and identify trigger events that would cause the organization to take corrective action.

- Assess third-party contracts and compliance efforts, ensuring contracts adequately stipulate information security, data privacy and nth-party requirements. Ensure third-party adherence to contracts is monitored.

- Investigate third-party regulatory requirements. Assess how effectively senior management communicates regulatory updates across the business and how clearly it articulates requirements for third parties.

- Evaluate the classification of third-party risk and confirm that the business conducts random checks of third parties to ensure classifications properly account for actual risk levels.
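The random checks of third-party classifications mentioned above can be sketched in a few lines of Python. This is an illustration only: it assumes a register that maps each third party to an assigned risk tier, and it draws the sample separately from each tier so that low-risk vendors are also checked. The vendor names, tier labels and the per-tier sampling approach are all hypothetical choices, not part of the Gartner guidance.

```python
import random

# Hypothetical third-party register: vendor name -> assigned risk tier.
REGISTER = {
    "vendor-a": "high",
    "vendor-b": "high",
    "vendor-c": "medium",
    "vendor-d": "medium",
    "vendor-e": "low",
    "vendor-f": "low",
    "vendor-g": "low",
}


def sample_for_review(register, per_tier, seed=None):
    """Randomly pick up to per_tier vendors from each risk tier."""
    rng = random.Random(seed)  # a seed makes the draw reproducible for workpapers
    by_tier = {}
    for vendor, tier in sorted(register.items()):
        by_tier.setdefault(tier, []).append(vendor)
    return {
        tier: rng.sample(vendors, min(per_tier, len(vendors)))
        for tier, vendors in by_tier.items()
    }


def misclassified(register, assessed):
    """Vendors whose reviewed tier differs from the tier in the register."""
    return sorted(v for v, tier in assessed.items() if register.get(v) != tier)


if __name__ == "__main__":
    picks = sample_for_review(REGISTER, per_tier=1, seed=2019)
    print("Selected for review:", picks)
    # Assumed review outcome, for illustration: vendor-e turned out to be medium risk.
    print("Misclassified:", misclassified(REGISTER, {"vendor-e": "medium", "vendor-a": "high"}))
```

Sampling within each tier is one design choice among several; a simple random draw across the whole register would also satisfy the idea of a random check.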

Data privacy

Companies today collect an unprecedented amount of personal information, and the costs of managing and protecting that data are rising. Seventy-seven percent of audit departments say data privacy will definitely be covered in audit activities in the next 12–18 months.

What audit can do:

- Review data protection training and ensure that employees at all levels complete the training. Include elements such as how to report a data breach and protocols for data sharing.

- Assess the current level of GDPR compliance and identify compliance gaps. Review data privacy policies to make sure the language is clear and customer consent is clearly stated.

- Assess data access and storage. Make sure access to sensitive information is role-based and privileges are properly set and monitored.

- Review data breach response plans. Evaluate how quickly the company identifies a breach and the mechanisms for notifying impacted consumers and regulators.

- Assess data loss prevention and review whether tools scan data at rest and in motion.