Key Takeaways

- Threat intelligence is the output of analysis based on identification, collection, and enrichment of relevant data and information.

- Always keep quantifiable business objectives in mind, and avoid producing intelligence “just in case.”

- Threat intelligence falls into two categories. Operational intelligence is produced by computers, whereas strategic intelligence is produced by human analysts.

- The two types of threat intelligence are heavily interdependent, and both rely on a skilled and experienced human analyst to develop and maintain them.

Everybody in the security world knows the term “threat intelligence.” At this point, even some non-security folks have started talking about it.

But it’s still very poorly understood.

Raw data and information are often mislabeled as intelligence, and the process and motives for producing threat intelligence are often misconstrued.

If you’re new to the field, or you think your organization could benefit from a carefully constructed threat intelligence program, here’s what you need to know first.

Defining Threat Intelligence

Although most people believe they intuitively understand the concept, it pays to work from a precise definition of threat intelligence.

Threat intelligence is the output of analysis based on identification, collection, and enrichment of relevant data and information.

As noted above, raw data and information do not constitute intelligence. Equally, analyzed data and information only qualify as intelligence if the result can be tied directly to business goals.

A truly well-planned and executed threat intelligence initiative has the potential to provide enormous benefit to your organization. On the flip side, if you aren’t careful, it’s easy to sink huge amounts of resources into an intelligence program without really achieving anything.

It would be foolish, then, to invest heavily in threat intelligence without having a clear idea of what you’re trying to achieve and why.

Simply “keeping the business secure” is not a valid motive for threat intelligence, but it’s the only driver for many organizations. The issue here is that as a goal it’s spectacularly generic, and almost impossible to measure.

A threat intelligence program with this motive is at serious risk of failing to identify what is and isn’t relevant or important.

A much better business goal, which is both relevant and tangible, would be to reduce operational risk by a given margin within a specified time period. Operational risk is a regularly measured and monitored business metric, and the results (however they’re derived) are there for all to see.

As a result, a threat intelligence program designed to reduce operational risk will be far more focused on those aspects of security that can be clearly linked to the markers used to measure that risk. As an example, intelligence relating to recent attacks on similar organizations within the same industry would be highly relevant, whereas analysis of the most recent high-profile attack in a totally different industry would not.

Intelligence Typologies

Perhaps the single most important phase of the whole process is analysis. During this phase, large quantities of raw data and information are processed into relevant, actionable intelligence.

But the actual analysis process can vary enormously depending on the desired output. Broadly speaking, threat intelligence falls into two categories according to the form of analysis used to produce it: operational and strategic.

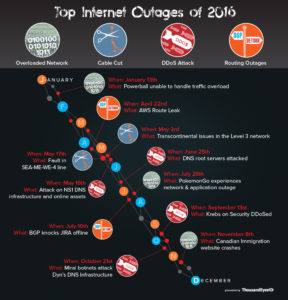

Operational intelligence is produced entirely by computers, from data identification and collection through to enrichment and analysis. A common example of operational threat intelligence is the automatic detection of distributed denial of service (DDoS) attacks, whereby a comparison between indicators of compromise (IOCs) and network telemetry is used to identify attacks much more quickly than a human analyst could.
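As a rough illustration of that comparison step, the sketch below checks network flow records against a set of known-bad addresses and address ranges. It is a minimal example under stated assumptions, not a description of any particular product: `FlowRecord`, `match_iocs`, and the sample addresses (taken from the reserved documentation ranges) are all hypothetical, and a real DDoS detector would also look at traffic volume and rate, not just address matches.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class FlowRecord:
    """One simplified line of network telemetry (hypothetical schema)."""
    src_ip: str
    dst_ip: str
    dst_port: int


def match_iocs(flows, bad_ips, bad_networks):
    """Return the flows whose source or destination matches an IOC.

    bad_ips:      set of exact IP address strings
    bad_networks: iterable of CIDR strings, e.g. "198.51.100.0/24"
    """
    networks = [ip_network(cidr) for cidr in bad_networks]
    hits = []
    for flow in flows:
        for addr in (flow.src_ip, flow.dst_ip):
            ip = ip_address(addr)
            if addr in bad_ips or any(ip in net for net in networks):
                hits.append(flow)
                break  # one match is enough to flag this flow
    return hits


# Example usage with made-up telemetry and indicators.
flows = [
    FlowRecord("10.0.0.5", "203.0.113.7", 443),
    FlowRecord("10.0.0.6", "198.51.100.20", 80),
    FlowRecord("10.0.0.7", "192.0.2.1", 53),
]
hits = match_iocs(flows, bad_ips={"203.0.113.7"}, bad_networks=["198.51.100.0/24"])
for flow in hits:
    print(f"IOC match: {flow.src_ip} -> {flow.dst_ip}:{flow.dst_port}")
```

The point is speed and scale: a loop like this can run continuously over every flow, which is exactly the kind of work a human analyst cannot do by hand.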

Strategic intelligence focuses on the much more difficult and cumbersome process of identifying and analyzing threats to an organization’s core assets, including employees, customers, infrastructure, applications, and vendors. To achieve this, highly skilled human analysts are required to develop external relationships and proprietary information sources; identify trends; educate employees and customers; study attacker tactics, techniques, and procedures (TTPs); and ultimately, make the defensive architecture recommendations necessary to combat identified threats.

A common example of strategic intelligence is the use of threat actor TTPs to inform proactive security measures such as enhanced vulnerability and patch management or comprehensive security awareness training.

And it’s natural at this stage to wonder …

Which Is Better?

This question is problematic for two reasons.

First, it’s the natural question to ask when presented with two options, and second, it totally misses the point.

The reality of threat intelligence is that both operational and strategic intelligence are required. More than that, though, they actively rely on each other.

For a start, the idea that the end-to-end process for producing operational intelligence involves no human analysts is misleading. As Levi Gundert points out in his threat intelligence white paper, achieving an automated operational workflow is highly dependent on the presence of at least one talented and experienced data architect. This person is responsible for designing, creating, and calibrating tools that are capable of performing this vital intelligence function.

And the only reason that any analysts are available to produce strategic intelligence is that the operational “heavy lifting” is being done automatically by computers. If that weren’t the case, intelligence analysts would be totally bogged down with detail and false positives.

If this is starting to seem like a “chicken-and-egg” situation, let us help you out.

To build a world-class threat intelligence capability, the first thing you’ll need is at least one highly skilled and experienced human analyst. Once a person or team with the right skillset is in place, they will need to move through three stages:

- Develop or procure the systems needed to automate the identification, collection, and enrichment of threat data and information.

- Create and maintain the tools needed to produce operational threat intelligence.

- Focus their attention on the production of highly targeted and valuable strategic intelligence.
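One way to picture how the first two stages free up analyst time for the third is the sketch below. It is only an assumption-laden toy: the `Indicator` type, the `collect`, `enrich`, and `triage` functions, the confidence threshold, and the asset inventory are all hypothetical, and real strategic analysis involves far more than reviewing a queue. The sketch merges indicators from several feeds, attaches business context, handles high-confidence items automatically, and leaves only the ambiguous, relevant ones for a human.

```python
from dataclasses import dataclass, field


@dataclass
class Indicator:
    value: str
    source: str
    confidence: float  # 0.0 to 1.0, as reported by the (hypothetical) feed
    context: dict = field(default_factory=dict)


def collect(feeds):
    """Merge raw indicators, keeping the highest-confidence copy of each value."""
    merged = {}
    for feed in feeds:
        for ind in feed:
            best = merged.get(ind.value)
            if best is None or ind.confidence > best.confidence:
                merged[ind.value] = ind
    return list(merged.values())


def enrich(indicators, asset_inventory):
    """Attach business context: which internal assets touched each indicator."""
    for ind in indicators:
        ind.context["affected_assets"] = asset_inventory.get(ind.value, [])
    return indicators


def triage(indicators, auto_threshold=0.9):
    """Split relevant indicators into automated handling and an analyst queue."""
    automated, analyst_queue = [], []
    for ind in indicators:
        if not ind.context.get("affected_assets"):
            continue  # no link to our own assets, so not relevant here
        if ind.confidence >= auto_threshold:
            automated.append(ind)
        else:
            analyst_queue.append(ind)
    return automated, analyst_queue


# Example usage with made-up feeds and a made-up asset inventory.
feed_a = [Indicator("203.0.113.7", "feed_a", 0.95),
          Indicator("198.51.100.20", "feed_a", 0.40)]
feed_b = [Indicator("198.51.100.20", "feed_b", 0.60),
          Indicator("192.0.2.9", "feed_b", 0.99)]
inventory = {"203.0.113.7": ["web-01"], "198.51.100.20": ["hr-laptop-12"]}

automated, analyst_queue = triage(enrich(collect([feed_a, feed_b]), inventory))
print("handled automatically:", [i.value for i in automated])
print("left for analysts:", [i.value for i in analyst_queue])
```

Note that the indicator with the highest confidence of all is dropped because it has no link to the organization's own assets, which mirrors the earlier point about relevance to business goals.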

Sadly, many organizations never make it past stage one. Once they have an intelligence feed in place, they take action to mitigate the most basic threats using simple information such as IOCs and vulnerability announcements, and never progress to a level that would enable them to address real business needs and objectives.

If your threat intelligence capability is stuck at this level, you’re leaving a huge proportion of the business value of your threat intelligence feed on the table.

Don’t Settle, and Don’t Get Lost in the Woods

So far in this article, we’ve presented two clear and major dangers of developing a threat intelligence capability:

- Settling for simple threat data and information, instead of fighting for intelligence.

- Wasting valuable time and resources on producing intelligence that doesn’t further business goals.

To avoid these mistakes, you’ll need to keep pushing your analysts for more and better intelligence, while also stressing the importance of keeping things relevant.

Losing sight of either of these fundamental considerations can undermine the value of your program. Keep them at the forefront, though, and over time you’ll develop a truly world-class threat intelligence capability.

